This is the third post of the Cambridge SupTech Lab blog series “Artificial intelligence (AI) in financial supervision: Promises, pitfalls, and incremental approaches”. In the first post of the series, we explored opportunities for AI in financial supervision. In the second post, we further discussed the need for a “human in the loop” and the complexities of integrating AI into existing supervisory systems, including the ethical considerations, data privacy issues, and the ever-present challenge of balancing innovation and regulation. In this third post, we double-click on Generative AI, a subfield of AI that’s becoming ubiquitous in conversations around tech, financial services, and increasingly in financial supervision.

The field of AI might fairly be characterized as a series of hype cycles. As Maciej Cegłowski suggests, the hype often overshadows the substance of AI, especially for its implications in finance.

We’ve collectively drifted through the peaks and valleys of machine learning, hidden Markov models, artificial neural networks, embeddings, deep learning, recurrent networks, transformers, and beyond, at each step arguably correcting our inflated expectations, climbing the slope of enlightenment, before reaching a state of commoditization and productivity.

Most recently, the focus has been on Generative AI and its promise to redefine financial supervision. The term Generative AI encompasses a family of powerful models that are defined by their ability to, “generate new content — such as text, images, and videos — by employing algorithms that allow machines to learn patterns, extrapolate from existing data, and generate novel outcomes based on different inputs or ‘prompts.’” More technically speaking, these might include models such as generative adversarial networks (GANs) or generative pre-trained transformers (GPTs).

However, like every rose, Generative AI also has its thorns.

Generative technologies have interestingly elicited powerful reactions that range from dismissive (“glorified autocomplete”) to manageable risks (“an intellectual property problem”) to alarmist (a threat “more urgent” than climate change).

Practical disclaimers shared early on by some of the most prominent minds in this space highlight clearly expressed limitations to the models and our understanding of them. The rush to put these systems into production and be the first to seize on the promise of outsized value may introduce unnecessary risk into the system.

In this blog post, we cover Generative AI through the lens of financial supervision. We hope to de-hype the conversation within this space and serve as an antidote to alarmism, fatalism and religiosity that too often accompany the hype cycles of AI.

So, how does generative AI work?

Generative AI is a form of Artificial Intelligence that can generate text, images, or other types of media all in response to a simple ‘prompt’. These systems learn from patterns and structure of their training data, finally presenting an inspiring piece of new data. Notable examples include chatbots like ChatGPT by OpenAI and Bard by Google, along with AI systems like Stable Diffusion, Midjourney and DALL-E.

Generative models such as Hidden Markov models and Gaussian Mixture models have been integral to machine learning and AI since the 1950s. The evolution of these models has led to advancements like Variational Autoencoders, Generative Adversarial Networks (GAN) and the Generative Pre-trained Transformer, culminating in the 2023 release of GPT-4, considered by some to be an early form of artificial general intelligence.

Companies like Microsoft, Google, Baidu, and a legion of startups are betting big on this revolution. Yet, as with every revolution, concerns are raised – in this case, for example, over the real and potential misuse of generative AI systems in fabricating deceptive deep fakes or creating and disseminating misinformation that can be hard to detect.

Large Language Models (LLMs) like OpenAI’s GPT series are a critical aspect in the world of Generative AI. They are built using machine learning techniques and their main purpose is to generate text that could be written (or said) by a human.

How do they do it?

They are trained on an array of data from the internet and use that data to learn the art of predicting what comes next in a piece of text. This allows these tools to whip up fresh content. They can be useful, aiding in anything from writing help, tutoring, drafting emails, or even coding. This comes with an important caveat, though: Their outputs are simply echoes of the data they were trained on.\

While they are extremely useful, having a “human in the loop” is critical.

Generative AI systems can operate with a variety of input types – or modalities – including text, code, images, molecules, music, video, robotic actions, and even multimodal solutions that can combine two or more of these. Notably, transformer architecture has become central in many generative models across different modalities, giving birth to models capable of generating eloquent sentences and lifelike images. Examples include BERT and GPT for text, Vision Transformers and Swin Transformers for images, and CLIP for multimodal tasks.

Despite historical limitations of generative models in generating complex and diverse data, advancements in the field have led to significant improvements in image generation, language modeling, and other tasks. The current landscape reflects a convergence of historical modeling techniques, deep learning, and transformer architecture to generate high-quality, diverse outputs in various fields.

Generative AI is inescapable. It has progressed from being a mere possibility to a pervasive reality. The transformational potential across sectors is significant. Ignoring its influence could result in obsolescence. To remain competitive, organizations should act proactively and incorporate AI into their strategies. This integration is not merely about survival. It serves as a powerful vehicle for growth.

Responsible deployment and vigilant oversight

These promised benefits of AI are not without trade-offs and responsibilities. As discussed in the second post of this series, there is a growing concern about the ‘black box’ problem with AI. It can be difficult to understand how these models come to decisions. This is a big deal in areas where transparency is crucial, including financial supervision.

To meet this challenge, new systems using GANs have been trained to create user-friendly explanations for AI-powered decisions. For example, this could make applying for a loan application a more transparent process.

As generative AI systems are increasingly commoditized and scaled across financial markets, mindful design of these solutions can mitigate some of the risk. AI can become part of the toolbox for responsible supervision and could become an important tool in mitigating unintended consequences.

Conversely, we are already beginning to see what’s in store if we’re not intentional and mindful when deploying these models. We see access controls breaking down when humans are asked to identify AI-generated objects that don’t exist. We see prompt injection continuing to poison chatbots and be used as a threat vector for information gathering, fraud, intrusion, malware, and manipulated content. While the proof of concept for these exploits focuses on relatively benign topics like tricking an automated job recruiter, one could readily imagine this same approach applied by a malicious supervised entity to skirt LLM-based automated checking of compliance reports.

A “turning point for humanity” – if we get it right

The effect of generative AI in financial services specifically has recently been described as “the screech of dial-up internet.” Deutsche Bank similarly described the implications of generative AI as massive, calling this technology “a turning point for humanity.”

Wall Street has been flirting with generative AI for a while now.

AI chatbots are already offering financial advice. Integrating generative AI into financial supervision has seen some genuine progress. Large language models like BloombergGPT and JPMorgan’s IndexGPT are already revolutionizing how we understand financial news and asset management.

JPMorgan Chase is setting a new standard with the announcement of their AI chatbot, IndexGPT. Though we lack granular insight into the inner workings of IndexGPT, in principle this groundbreaking initiative provides online financial information and investment advice. It is clear that JPMorgan remains all-in on AI and data. The launch of IndexGPT is part of its bigger push using AI across various sectors, especially in finance. As of September 2023, they have over 2000 people working on AI strategies, including focusing on cloud-based systems to make things faster (and cheaper).

Bloomberg is also getting serious about AI with their new model, BloombergGPT. This AI model, trained specifically on financial jargon, can perform many tasks, including sentiment analysis, new classification, and even answering questions. Recognizing that finance needs a dedicated AI, Bloomberg argues that BloombergGPT, with its own complex lexicon, is just that.

Following the trend towards more domain-specific LLMs, BloombergGPT is trained specifically for a wide range of tasks within the financial industry. The model provides the best results on financial benchmarks while still performing competitively on general-purpose benchmarks. It was trained using the largest domain-specific dataset yet, constructed from financial documents collected over 40 years and mixed with public datasets to create a training corpus of over 700 billion tokens.

It is costly to retrain BloombergGPT every month or every week, thus lightweight adaptation is highly favorable. Most recently, a new tool called FinGPT has stepped into this gap. Whereas BloombergGPT trained an LLM using a mixture of finance data and general-purpose data over about 53 days, at a cost of around $3M, FinGPT promises the ability for swift fine-tuning to incorporate new data for less than $300 per fine-tuning. This exponential gains in time and cost efficiency are likely to continue.

Because of the incredible scope and impact of AI in finance, the authorities tasked with mitigating emerging risks must adopt suptech solutions built using similar technologies, while also taking care to minimize the risks. In fact, applications already appear in financial supervision.

The Monetary Authority of Singapore (MAS) has developed an experimental knowledge bot that helps them to navigate summaries of their supervisory knowledge base. This chatbot, based on an open-source large language model, leverages public information along with selected MAS data. A key application of the chatbot helps economists who perform macroeconomic surveillance generate economic updates on specific countries.

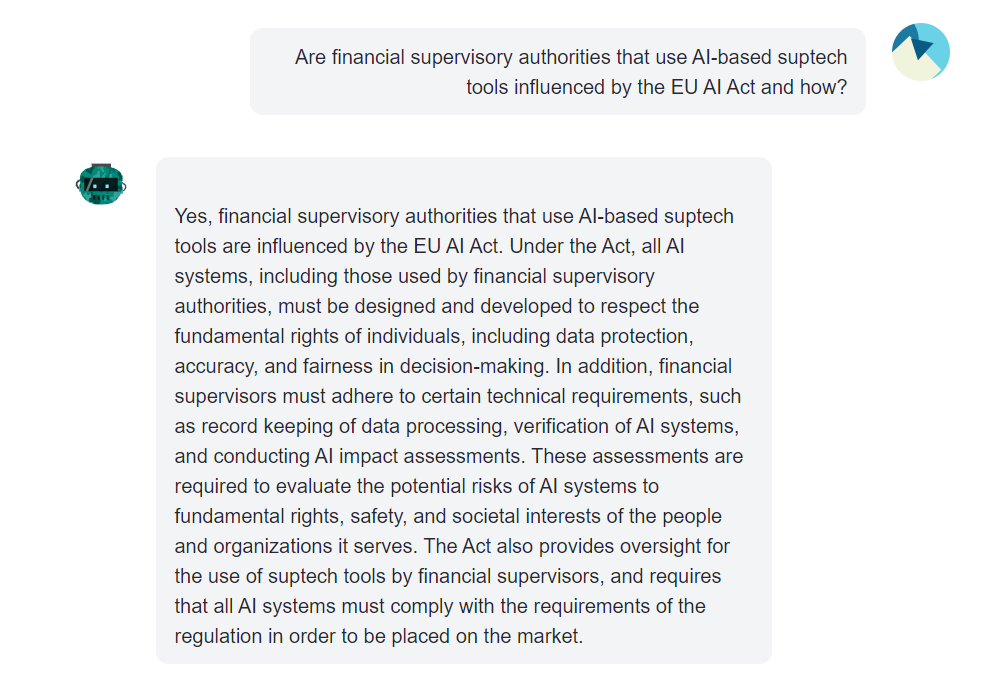

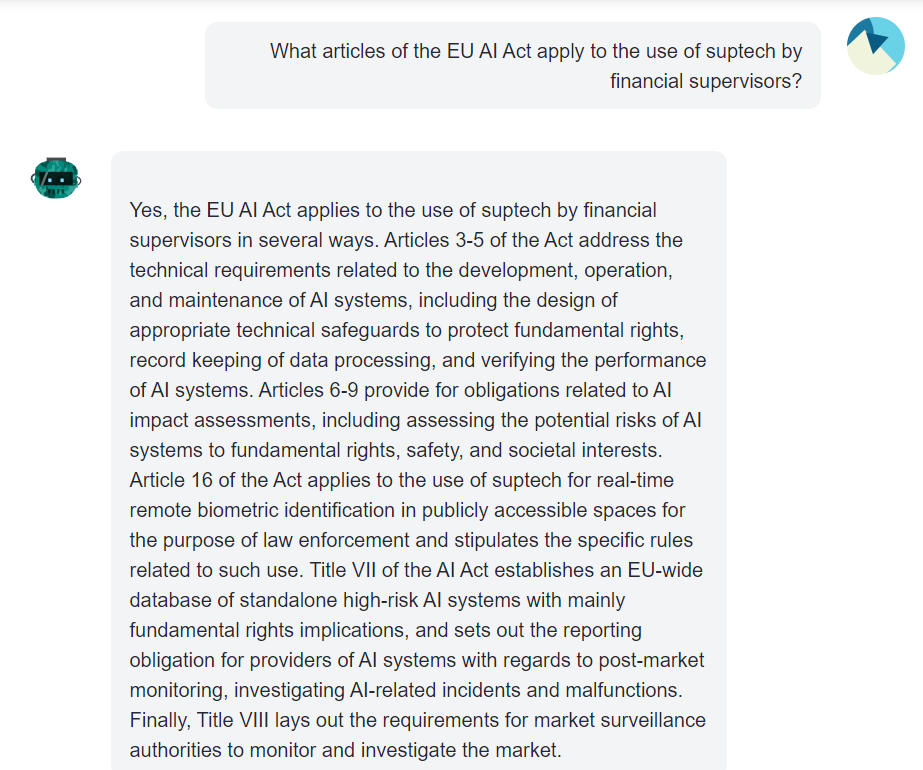

In the legal and policy realm, a GPT-X model has been trained on the contents of the EU’s newly introduced AI Act and can engage in a dialog with the model to understand the implications and nuances of the Act. Examples of prompts and responses related to the suptech space can be seen in the figures below:

An opportunity for financial supervisors

Financial systems are complicated and keeping track of transactions can be a demanding task.

However, generative AI can help.

Monitoring regulatory compliance and risk management through unstructured, text-based reports is time-consuming and error-prone. Collaborating across borders to detect and prevent financial crime is difficult without a common dataset on which to train models.

Imagine a world where lengthy financial documents transform into concise summaries, where raw data becomes easily explained reports with meaningful insights.

The financial supervision capabilities required for each of these cases stand to be enhanced with Generative AI. Properly trained GPT models are proficient at generating human-readable reports from raw data, summarizing the contents of lengthy documents, and generating representative synthetic datasets that can be used as a neutral sandbox for testing other machine learning-based suptech solutions (e.g. the forthcoming Data Gymnasium produced by the Cambridge SupTech Lab).

Another area where generative AI truly shines is automated risk analysis. By leveraging the immense computational power of AI, financial supervisors can analyze large, less-structured datasets in ways that go beyond human capabilities. AI models can sift through these data, identify patterns, and use generative models to create detailed risk analyses based on past data.

Generative AI also has many potential use cases in anti-money laundering supervision. From generating Suspicious Activity Reports (SARs) to spotting patterns in fraud detection and prevention, the possibilities are thrilling.

Customers and financial institutions could be more protected and financial systems could significantly be enhanced. The fight against fraud stands to benefit significantly from generative AI. AI’s capacity to generate predictive models offers an effective means of spotting abnormal activities and identifying potential instances of fraud before they escalate into larger issues.

Combining these opportunities for financial supervision with the potential of cross-border collaboration and data sharing to detect and prevent financial crime with a common dataset on which to train models will lead to a level of efficiency and foresight that was previously unimaginable.

What supervisors need to know about Generative AI

Generative AI is not a free-for-all. There are important ethical and privacy considerations to be aware of.

Generative AI models need to be deployed in a manner that integrates best practices in cybersecurity and data privacy to ensure they don’t inadvertently leak sensitive information. There is a need to comply with privacy laws and regulations, a prerequisite that has even led the Italian regulators to initially ban ChatGPT in Italy. Regulators in that country claimed that its maker, OpenAI, did not satisfy the privacy laws of the country.

Ensuring the accuracy of generated data, dealing with potential biases in algorithms, and managing the privacy and security of financial information are just a few key responsibilities that supervisors will need to consider as we move forward.

With its nearly instantaneous computing capabilities, AI may feel pretty “smart.” However, it is a grave mistake to think of the current systems as conscious or even capable of decision making outside the bounds of their training. For example, these models cannot discern the difference between public and confidential data. This might lead to some sticky situations, like sensitive information popping up in AI responses.

When the Italian regulators hit the brakes on ChatGPT this past March, it was because they felt it wasn’t playing by the rules of privacy laws like GDPR. As a result, OpenAI has been required to spend a lot of effort showing Italian regulators that it’s compliant with GDPR and was reinstated at the end of April 2023.

Privacy concerns with OpenAI might not be over just yet. A lawsuit from a Californian-based law firm against OpenAI claims that the company violated the rights of millions of internet users by using their publicly available online content to train its models, without their consent or compensation. The lawsuit aims to institute legal boundaries on AI training practices and to potentially establish a method for compensating individuals when their data is used.

Another issue that’s worth supervisors’ attention is bias. AI learns from a colossal amount of data, but what if the data it learns from are biased? The AI system could end up playing favorites, too. This is a big deal for financial supervisors, who need to make sure that AI-generated responses are as fair, transparent, and ethical as possible. The trick is in constant monitoring and fine-tuning these models to keep any bias at bay.

AI hallucinations, or more appropriately, AI confabulations, can also pose problems. This occurs when AI comes up with information that it was not explicitly trained on, which can lead to unreliable outputs such as fictional citations, false quotations, and the like.

For example, significant concerns were raised about the credibility of and reliability of Google’s Bard. The tech giant, previously celebrated for its advancements in the AI sphere, has been caught up in a storm of controversy following a series of factual inaccuracies. Bard’s promotional video, marred by a significant error, caused Alphabet’s shares to plummet by 8%. This error cast doubt on the brand’s reputation and stirred a broader public debate on the reliability of generative AI.

Many argue that this is where ‘prompt engineering’ comes into play. It is all about crafting the perfect prompts to guide the AI model and get the output that you want. Prompt engineering is new on the block, so many organizations are still figuring out how to get results.

Yet, the spotlight on prompt engineering may be temporary. Instead, an enduring skill set promises to better leverage AI’s potential: problem formulation. This term describes the capacity to identify, analyze and clearly define problems. Unlike prompt engineering, which primarily focuses on manipulating linguistic elements (by both good and bad actors), problem formulation requires a deep understanding of the problem and the ability to extract meaningful issues from the real-world.

Without a well-defined problem, even the most finely tuned prompts can be ineffective.

The journey with generative AI is a mix of exciting possibilities, ethical puzzles, and practical issues. We must navigate potential pitfalls, keep privacy at the forefront, seek to address data quality and data accuracy, and master the art of prompt-engineering. But despite the challenges, AI will play a key role in strengthening financial supervision and regulatory compliance.

Humans must decide

Though AI comprises an impressive set of capabilities, there must be a ‘human in the loop.’ In sectors like financial supervision, human expertise is indispensable for scrutinizing AI systems, adding context, and making decisions in ambiguous situations.

Human interventions should be considered by supervisors when integrating Generative AI applications, as they prove critical in areas such as risk management and financial crime detection. This is of particular concern for Generative AI applications that produce confabulations (i.e. “hallucinations”) unless carefully trained and curated.

The mere existence of confabulations underscores a critical point regarding AI and financial supervision: the ‘human in the loop’ must decide if AI’s output is reliable.

As we invite AI more into financial supervision, we need to make sure that it behaves properly – meaning that it should stick to the legal and ethical frameworks including data privacy, consumer protection, anti-money laundering laws and regulations.

It is crystal clear that regulatory compliance is a big deal, and a new player is now part of the discussion.

While experts disagree on the EU AI Act’s potential effectiveness, most agree that it serves as an important foundation in the attempt to regulate AI. The proposed regulation sets out minimum standards for all foundation models, introduces specific rules for high-risk scenarios and focuses on collaboration and information exchange along the AI value chain. Everyone involved in AI plays by the same rules and shares information. The Act also makes some headway with rules on Generative AI, like transparency about its use, training data and copyright issues, and content moderation.

Like any draft legislation, it has its fair share of critics. This blog will not dive into the details of the EU AI Act, though, but the ‘ChatGPT Rule’ (Art. 28b (4)) is accused of not going far enough in enforcing transparency and compliance with EU law and being too vague on the use of copyrighted material in training data.

But where does all this leave us in Generative AI in financial supervision?

The EU AI Act could give us a solid framework to regulate AI and manage potential risks. It could set clear rules of the game and foster trust in AI systems. But if the Act becomes too vague, it could stifle innovation. As we embrace generative AI in financial supervision, we need to balance the rules to manage risks and to ensure fairness, transparency and compliance with the need for flexibility and clarity to nurture innovation.

Conclusion

Generative AI is a promising vision. The technology has significant potential to shape the future of financial supervision. Its ability to handle vast amounts of data, detect patterns, and generate insight could revolutionize how financial authorities operate and supervise markets.

We need to exploit this tech without being exploited by it.

As we stride into the future, we should embrace the potential of generative AI while remaining aware of its limitations and potential risks.